The chance of a lifetime for an SEO practitioner, a conversation with a former Google Webspam Team member, came this morning (March 17, 2015) and produced pages of notes and insights. While we can’t divulge our contact’s name, we will say they played a major role in laying the foundation for filters and rules still in place today. Hang on to your seats, folks: a good share of our notes from the interview is in this post.

Technical SEO Best Practices

We’ve been hard at work putting together a comprehensive SEO Roadmap strategy for small and mid-sized businesses and wanted to know whether we were on the right path, so we gave our new friend a copy of our digital marketing checkup: the audit list we’re using as the starting point for building the new website.

Our goal: to see where our roadmap might be lacking. I also wanted to find out what items (if any) were irrelevant and could be removed. As it turns out, we just about nailed it. Here are the results from a sampling of the list we discussed:

- Site offers a mobile-friendly design – obviously, yes (announced Feb 26, 2015)

- 404 crawl error handling – very important

- Breadcrumbs – very important

- Consistent internal linking and canonical URLs – very important

- robots.txt not blocking style sheets or scripts – important

- Structured markup (Microdata, Schema.org) – important

- Consistent XML sitemap – important
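For the robots.txt item, a quick self-check is to test your CSS and JavaScript URLs against your robots.txt rules. The sketch below uses Python’s standard-library `urllib.robotparser`; the robots.txt contents and resource URLs are hypothetical placeholders. One caveat: that parser implements the original prefix-matching rules, not Google’s `*` and `$` wildcard extensions, so treat it as a first pass rather than a replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; substitute your site's real file contents.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/
Disallow: /admin/
"""

# Hypothetical style sheet and script URLs that pages need in order to render.
RESOURCE_URLS = [
    "https://www.example.com/assets/site.css",
    "https://www.example.com/assets/app.js",
    "https://www.example.com/static/print.css",
]


def blocked_resources(robots_txt, urls, agent="Googlebot"):
    """Return the URLs that the given robots.txt disallows for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse from text, no network fetch
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for url in blocked_resources(ROBOTS_TXT, RESOURCE_URLS):
        print("Blocked for Googlebot:", url)
```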

Breadcrumbs Discussed

The way Google describes it,

A breadcrumb trail is a set of links that can help a user understand and navigate your site’s hierarchy, like this:

Webmaster Tools › Help articles › My site and Google › Creating Google-friendly sites

As a consultant, it’s been difficult to get buy-in from some of the clients we work with on the importance of breadcrumbs.

In our experience, breadcrumbs are a simple way to add internal links, with descriptive anchor text, pointing to critical (often broad) category or parent pages. We infer that these attributes matter because Google Webmaster Tools lists your most-linked-to pages, and its support page clearly states:

“The number of internal links pointing to a page is a signal to search engines about the relative importance of that page.”
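Webmaster Tools already reports your most-linked-to pages, but the underlying count is easy to approximate from your own crawl. This minimal sketch assumes a hypothetical link graph (each page mapped to the internal URLs it links to) and counts how many distinct pages link to each URL; note how breadcrumb links back up the hierarchy lift the parent category page. Google’s exact counting rules may differ.

```python
from collections import Counter

# Hypothetical internal link graph: page -> internal URLs it links to.
LINKS = {
    "/": ["/services/", "/about/", "/contact/"],
    "/services/": ["/", "/services/plumbing/", "/services/hvac/"],
    "/services/plumbing/": ["/", "/services/"],  # breadcrumb links back up the trail
    "/services/hvac/": ["/", "/services/"],
    "/about/": ["/", "/contact/"],
    "/contact/": ["/"],
}


def inbound_link_counts(links):
    """Count how many distinct pages link to each URL, ignoring self-links."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):  # a page counts once per target
            if target != source:
                counts[target] += 1
    return counts


if __name__ == "__main__":
    for url, n in inbound_link_counts(LINKS).most_common(3):
        print(f"{n:>3}  {url}")
```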

Our former Google Webspam Team member suggested that we move breadcrumbs up in our prioritization of technical SEO tasks and that we make them more “structured” so content categories can be cataloged efficiently. The other recommendation was not to use schema.org/breadcrumb, because it’s still not supported (there is a bug that hasn’t been fixed). Instead, Google recommends using RDFa, as shown below:
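Our notes don’t include the exact markup, so here is a hedged sketch of a generator for a flat RDFa breadcrumb trail. It assumes the data-vocabulary.org attributes (`typeof="v:Breadcrumb"`, `rel="v:url"`, `property="v:title"`) that Google’s breadcrumb documentation described at the time; supported vocabularies change, so check Google’s current structured-data documentation before deploying. The function name and example trail are hypothetical.

```python
from html import escape


def rdfa_breadcrumbs(trail, separator=" › "):
    """Render (title, url) pairs as a flat RDFa breadcrumb trail.

    Assumes the data-vocabulary.org attribute names; verify against Google's
    current structured-data documentation before using this in production.
    """
    crumbs = [
        '<span typeof="v:Breadcrumb">'
        f'<a href="{escape(url)}" rel="v:url" property="v:title">{escape(title)}</a>'
        "</span>"
        for title, url in trail
    ]
    return '<div xmlns:v="http://rdf.data-vocabulary.org/#">' + separator.join(crumbs) + "</div>"


if __name__ == "__main__":
    print(rdfa_breadcrumbs([
        ("Home", "https://www.example.com/"),
        ("Services", "https://www.example.com/services/"),
        ("Plumbing", "https://www.example.com/services/plumbing/"),
    ]))
```

Titles and URLs are HTML-escaped so that category names containing `&` or quotes don’t break the markup.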

When the markup is implemented properly, a webmaster can see breadcrumbs displayed within Google search results and, later, the resulting increase in click-through rate within Google Webmaster Tools.

Many notable SEOs dismiss click-through rate, a behavioral signal, as unimportant to ranking. Our data and experiments show otherwise.

Here is a screenshot of what breadcrumbs could look like within search results:

Internal Linking

I’ve always believed that internal linking in general, not just breadcrumbs, is an important signal to search engines, both for determining a page’s importance and for passing PageRank.

In 2014, many of my peers published results from experiments in which they increased internal links and varied anchor text, with little to no change in search engine rankings. I almost gave up hope.

According to the former Webspam Team member we spoke with, internal links are still very important. You can confirm this by reviewing how much organic and overall traffic pages receive once internal linking to them is given emphasis.
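To see why internal links can move the needle, it helps to look at the textbook PageRank calculation, which is a simplification and not Google’s current ranking system. This sketch runs the classic power iteration (damping factor 0.85) over a hypothetical four-page site, first with an orphaned page and then after two internal links point to it.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank by power iteration over an internal link graph.

    `links` maps each page to the list of pages it links to.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page first receives the "random jump" share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical site: /services/hvac/ is orphaned (no internal links point to it).
BEFORE = {
    "/": ["/about/", "/services/"],
    "/about/": ["/"],
    "/services/": ["/"],
    "/services/hvac/": ["/"],
}
# The same site after linking to /services/hvac/ from two pages.
AFTER = {
    "/": ["/about/", "/services/", "/services/hvac/"],
    "/about/": ["/"],
    "/services/": ["/", "/services/hvac/"],
    "/services/hvac/": ["/"],
}

if __name__ == "__main__":
    for label, graph in (("before", BEFORE), ("after", AFTER)):
        print(label, round(pagerank(graph)["/services/hvac/"], 3))
```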

However, linking alone isn’t effective unless anchors use consistent URLs. In other words, each internal link should point to exactly the same URL as the canonical tag of the page being linked to; otherwise, Googlebot has to think. Don’t make Googlebot think.
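One way to audit this is to record each crawled page’s canonical tag and flag any internal link whose target declares a different canonical URL. The sketch below does that with Python’s standard-library `html.parser` over a hypothetical, already-fetched set of pages; fetching, robots.txt handling, and redirects are left out.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collects the canonical URL and all <a href> values from one page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attrs.get("href"):
            self.canonical = attrs["href"]
        elif tag == "a" and attrs.get("href"):
            self.hrefs.append(attrs["href"])


def parse_page(url, html):
    """Return (canonical URL, absolute link targets without fragments)."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    canonical = urljoin(url, collector.canonical) if collector.canonical else url
    links = [urldefrag(urljoin(url, href))[0] for href in collector.hrefs]
    return canonical, links


def non_canonical_links(pages):
    """`pages` maps crawled URL -> HTML; returns (source, linked URL, canonical) triples."""
    parsed = {url: parse_page(url, html) for url, html in pages.items()}
    canonical_of = {url: canonical for url, (canonical, _) in parsed.items()}
    problems = []
    for source, (_, links) in parsed.items():
        for target in links:
            canonical = canonical_of.get(target)  # None if the target wasn't crawled
            if canonical is not None and canonical != target:
                problems.append((source, target, canonical))
    return problems


# Hypothetical crawl: /services/ links to the slash-less plumbing URL.
PAGES = {
    "https://www.example.com/services/":
        '<link rel="canonical" href="https://www.example.com/services/">'
        '<a href="/services/plumbing">Plumbing</a>',
    "https://www.example.com/services/plumbing":
        '<link rel="canonical" href="https://www.example.com/services/plumbing/">',
    "https://www.example.com/services/plumbing/":
        '<link rel="canonical" href="https://www.example.com/services/plumbing/">'
        '<a href="/services/">Services</a>',
}

if __name__ == "__main__":
    for source, target, canonical in non_canonical_links(PAGES):
        print(f"{source} links to {target}; use {canonical}")
```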

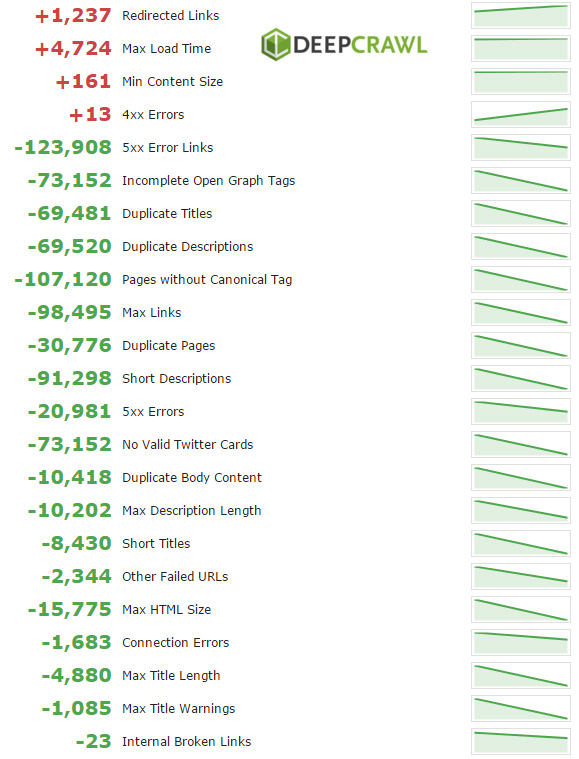

I mentioned a tool we’ve been using recently called Deepcrawl. Our new friend recognized it as one of the most widely adopted crawlers and also suggested we try Botify, a tool with similar pricing, which we eventually moved away from. Even more recently, another friend of ours, Nikita Sawinyh, suggested Seomator, which I’m looking forward to trying.

I’m still partial to Deepcrawl, mostly because it lets me trend improvements over time, but also because it combines data from your XML sitemaps, Google Analytics and its own crawl of your website. Crawling doesn’t get much more comprehensive than that.

Below is a screenshot to give you an idea of what I’m referring to in terms of trending improvements.

Deepcrawl Report Example:

Aside from the refreshing confirmation that internal links are still important, it was nice to be reminded of the value of keeping links consistent. In a nutshell, that means eliminating internal links that pass through 301 redirects, removing excessive or unneeded URL parameters, and keeping things simple.
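Parameter cleanup is easy to automate when you generate internal links. This sketch assumes a hypothetical list of parameters that never change page content on your site (`sessionid`, `ref`, and any `utm_` tracking tag) and strips them with `urllib.parse`, so every internal link points at the same clean URL. Which parameters are truly unneeded depends on your site, and redirect chains still need a crawler to find.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that never change page content on this site.
UNNEEDED_PARAMS = {"sessionid", "ref"}
TRACKING_PREFIXES = ("utm_",)


def clean_url(url):
    """Drop unneeded and tracking parameters and lowercase the host name."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in UNNEEDED_PARAMS
        and not key.lower().startswith(TRACKING_PREFIXES)
    ]
    # An empty path becomes "/" so "example.com" and "example.com/" match.
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path or "/", urlencode(kept), parts.fragment)
    )


if __name__ == "__main__":
    print(clean_url("https://WWW.Example.com/services/?utm_source=newsletter&sessionid=abc123&page=2"))
```

Parameters that do change content (such as `page` above) are kept, in their original order.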

I also asked about link value within global navigation, such as top, sidebar and footer navigation.

Our understanding is that Google identifies these similar “blocks” and gives less weight to links within them, therefore giving more weight to links within the body area of a webpage.

We were right again (on a roll here). The terms “repeated link” and “section-wide link” were brought up, and yes, less PageRank flows through these navigational links in general.
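Google’s method for spotting repeated or section-wide links isn’t public. As an illustration only, this sketch uses a simple frequency heuristic we’re assuming for the example: any link that appears on at least 80% of a hypothetical site’s pages is treated as part of a navigational block, which is roughly how top, sidebar, and footer links behave.

```python
from collections import Counter


def section_wide_links(page_links, threshold=0.8):
    """Return link targets that appear on at least `threshold` of all pages.

    A frequency heuristic for illustration; not Google's actual method.
    """
    counts = Counter()
    for links in page_links.values():
        counts.update(set(links))  # count each page at most once per link
    total = len(page_links)
    return {link for link, seen_on in counts.items() if seen_on / total >= threshold}


# Hypothetical site: "/", "/contact/" and "/services/" sit in the global navigation.
PAGE_LINKS = {
    "/": ["/contact/", "/services/", "/about/"],
    "/about/": ["/", "/contact/", "/services/"],
    "/services/": ["/", "/contact/", "/services/plumbing/"],
    "/services/plumbing/": ["/", "/contact/", "/services/"],
    "/contact/": ["/", "/services/"],
}

if __name__ == "__main__":
    print(sorted(section_wide_links(PAGE_LINKS)))
```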

Content SEO Best Practices

Unfortunately, we ran out of time and I didn’t get all my content questions answered.

However, I did pose the question of whether or not we’re going to completely lose control over the ability to have our titles appear within search results.

We wrote amazing titles for a franchise account recently, based on AdWords ad CTR data, only to have Google replace our amazing new titles with “service name – entity name.” Frustrating, right? See example below.

It turns out we can somewhat influence the titles shown in search results by borrowing search terms from Webmaster Tools (search query data > top pages), since Google wouldn’t display your page for those queries if it didn’t consider the page relevant to them.
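If you export that query data, picking candidate title phrases can be scripted. The sketch assumes hypothetical rows of (page, query, impressions, clicks) and returns the top queries per page by impressions, with clicks breaking ties; it’s a starting point for writing titles, not a guarantee Google will keep them.

```python
from collections import defaultdict

# Hypothetical rows from a search query export: (page, query, impressions, clicks).
ROWS = [
    ("/services/plumbing/", "emergency plumber denver", 1200, 48),
    ("/services/plumbing/", "plumber near me", 3400, 51),
    ("/services/plumbing/", "drain cleaning denver", 800, 30),
    ("/services/plumbing/", "water heater repair", 500, 9),
    ("/services/hvac/", "furnace repair denver", 950, 40),
]


def top_queries_by_page(rows, limit=3):
    """Group queries by page and keep the `limit` with the most impressions."""
    by_page = defaultdict(list)
    for page, query, impressions, clicks in rows:
        by_page[page].append((query, impressions, clicks))
    result = {}
    for page, entries in by_page.items():
        # Most impressions first; more clicks breaks ties, then alphabetical order.
        entries.sort(key=lambda entry: (-entry[1], -entry[2], entry[0]))
        result[page] = [query for query, _, _ in entries[:limit]]
    return result


if __name__ == "__main__":
    for page, queries in top_queries_by_page(ROWS).items():
        print(page, queries)
```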

Another fun idea I came up with (experiment to come) is to change the anchor text of my internal links, and a few external links, to the entire phrase I’d like to see in the SERPs, since our past comparative experiments show Google clearly uses anchor text when it rewrites titles.

What Impact Does Social Media Have on SEO?

You’re probably exhausted by the “correlation does not equal causation” rhetoric, but sorry, we have to lay it on you again. Links from social media and social engagement likely don’t factor into Google’s ranking signals, sorry folks.

However (here comes the rhetoric), with the right amount of influencer marketing, followers of authoritative people are more likely to blog about, mention and (here it comes) link to your content, which can have an impact on ranking.

Additionally, there “may be” a freshness signal if Google is finding new content mentioned and shared across social media channels. There’s no data to support this hypothesis, but the basic idea is that the freshness itself is the signal, not the link, not the mention, not the engagement, just the freshness.

Therefore, be active on these sites by sharing helpful content, concepts, and individual proactive support:

- Reddit – with caution

No mention of Google+ – interesting.

Our former Googler also recommended that we find students in a program within our industry and start informally mentoring them in a way that builds brand loyalty, a constant flow of fresh content, and continuous growth in relevant followers or subscribers.

Genius.

There was no disagreement with our suggestion to include the 2-3 most relevant keywords within the byline or description area of the social media profile (or page) itself.

Our tests show a very minor lift for smaller campaigns when Google frequently sees an entity mentioned next to a keyword, especially if the page with the co-occurrence is frequently linked to and crawled (as social media profiles often are).
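To audit your own profiles and pages for this kind of co-occurrence, you can count how often an entity name appears near a keyword. This sketch uses a hypothetical entity and keyword and a token window we chose arbitrarily; how Google measures co-occurrence, if at all, is unknown, so this is only a way to check your own copy.

```python
import re


def cooccurrences(text, entity, keyword, window=5):
    """Count entity mentions that start within `window` tokens of a keyword mention."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())

    def positions(phrase):
        # Start index of every exact occurrence of the multi-word phrase.
        words = phrase.lower().split()
        n = len(words)
        return [i for i in range(len(tokens) - n + 1) if tokens[i:i + n] == words]

    keyword_positions = positions(keyword)
    return sum(
        1
        for e in positions(entity)
        if any(abs(e - k) <= window for k in keyword_positions)
    )


# Hypothetical profile copy for a hypothetical business.
BIO = ("Acme Plumbing is the emergency plumber Denver trusts. "
       "Call Acme Plumbing today. Acme Plumbing also sells gift cards.")

if __name__ == "__main__":
    print(cooccurrences(BIO, "Acme Plumbing", "emergency plumber"))
```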

Action Items: Add your most important keywords to your byline, be helpful in important social media channels, and learn influencer marketing like it’s your new religion.

Google Patents and Spam Reports

Our friend Bill Slawski, of SEO by the Sea, has us addicted to following Google patents now, and I love it!

Here’s the good news: it apparently takes years for Google to get patents approved, and most of the time they are just ideas. The point of filing is simply to keep Bing and other engines from patenting these ideas before Google does.

So don’t stress over patents, but definitely feel free to follow our obsession, and blame Bill if you become an addict. Who knows, some of these ideas may help us understand where Google is heading in the long term. (We love you @bill_slawski)

Spam reports work.

Let me repeat that: spam reports work. We know this because we talked personally with one of the former Google Webspam Team members today.

However, you need to take a deep breath before starting your rant in a spam report and take the appropriate amount of time to do your research. Reporting your competitors for violating Google search quality guidelines only works when your report is actionable and compelling.

This means you’ll need to specify which guidelines the competitor is violating, show examples, and do your best to provide as much evidence as possible when you plead your case.

Got More Questions You’d Like Us to Ask?

Let us know if this interview was helpful and what questions you’d like us to ask if we get the opportunity to have another meeting with our new friends. Thanks for reading!